AI-generated sexual abuse is spreading faster than we can stop it.

Behind every image is a real person whose life can be shattered in minutes.

See the data

The reality behind the harm

AI-generated sexual abuse is not hypothetical. It is here, it operates at scale, and it is built on top of mainstream internet infrastructure.

A major study found that 48% of U.S. men report having seen deepfake pornography — showing how normalized AI‑generated sexual content has already become.

A 2025 policy brief shows a 550% increase in explicit AI deepfake videos since 2019, reaching 96,000+ videos recorded in 2023 alone.

Research on young people shows that 1 in 17 (≈6%) have already had deepfake nudes created of them — a level of AI‑driven sexual harm without historical precedent.

Ad‑driven amplification

AI sexual abuse tools aren't spreading organically. Investigations show that ad networks and social platforms are directly amplifying them at scale:

Up to 90% of traffic to one of the most popular AI "nudify" apps reportedly came from ads running on Meta platforms.

Investigations uncovered hundreds of paid ads for nudification apps on Facebook and Instagram, despite policies banning sexual exploitation.

Ad engines have delivered millions of impressions and driven hundreds of thousands of clicks to deepfake and nudify tools.

Cut through the obscurity.

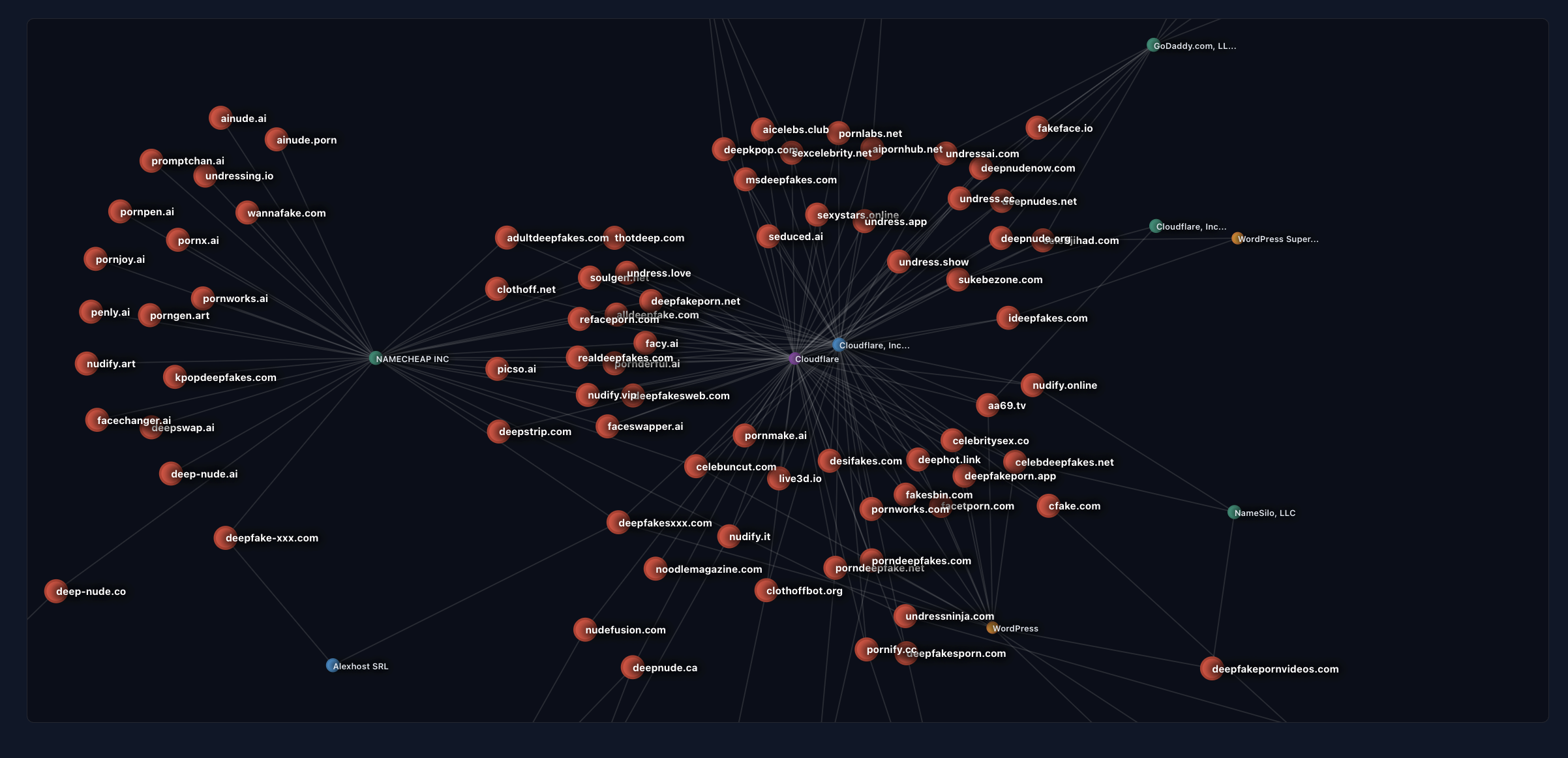

For every URL, the NCII Infrastructure Mapping Tool builds an infrastructure profile and drops it into an interactive network graph.
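As a rough illustration of the idea, the sketch below turns per-URL profiles into a small network graph and lists the providers that more than one site depends on. It is not the tool's actual implementation: the profile fields (`registrar`, `hosting`), the function names, and the example domains are all hypothetical placeholders.

```python
from collections import defaultdict
from urllib.parse import urlparse


def build_graph(profiles):
    """Return an undirected adjacency map linking each site to its providers.

    Nodes are (kind, name) tuples, e.g. ("site", "site-a.example")
    or ("hosting", "Host Y").
    """
    graph = defaultdict(set)
    for url, profile in profiles.items():
        site = ("site", urlparse(url).hostname or url)
        for role, provider in profile.items():
            node = (role, provider)
            graph[site].add(node)
            graph[node].add(site)
    return graph


def shared_providers(graph):
    """List provider nodes that are connected to more than one site."""
    return sorted(
        node
        for node, neighbours in graph.items()
        if node[0] != "site" and len(neighbours) > 1
    )


# Hypothetical profiles: field names and values are placeholders,
# not the fields the real tool collects.
profiles = {
    "https://site-a.example/": {"registrar": "Registrar X", "hosting": "Host Y"},
    "https://site-b.example/": {"registrar": "Registrar Z", "hosting": "Host Y"},
}

graph = build_graph(profiles)
print(shared_providers(graph))  # [('hosting', 'Host Y')]
```

Keying the graph on providers as well as sites is what makes shared infrastructure visible: a single hosting or ad provider that connects many sites shows up as a hub.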